Data preprocessing techniques for small data in materials science include data cleaning, normalization, and feature scaling. These techniques are crucial to ensure the quality and reliability of the small dataset before applying machine learning algorithms for analysis.

In materials science, where data may be limited, these preprocessing techniques help in optimizing the performance of machine learning models and enhancing the accuracy of predictions. By understanding and implementing these techniques, researchers can make the most out of the available small datasets and extract meaningful insights that contribute to advancements in materials science.

Proper data preprocessing lays the foundation for successful machine learning applications and allows for more accurate and robust results in materials science research.

Understanding Small Data Machine Learning

Importance Of Small Data In Materials Science

In materials science, small datasets often contain valuable information that can be used to make significant advancements. These datasets may come from expensive experiments, limited samples, or rare natural occurrences. Despite their size, small datasets can provide critical insights into material properties, which is essential for developing innovative materials for various applications. Leveraging small data machine learning techniques in materials science can unlock the potential of these limited datasets, enabling researchers and engineers to make informed decisions and drive breakthroughs in material development.

Challenges Of Applying Machine Learning On Small Data

Applying machine learning to small datasets in materials science comes with its own set of challenges. Limited sample sizes may lead to overfitting or poor generalization of predictive models. Noise and variability in small datasets can also impact the accuracy and reliability of machine learning algorithms. Furthermore, feature engineering and data preprocessing become crucial in small data machine learning to ensure the quality and relevance of input features. Overcoming these challenges demands a deep understanding of data preprocessing techniques tailored to small datasets in materials science, allowing for meaningful and interpretable results.

Data Collection And Cleaning

In the domain of materials science, data preprocessing is a critical precursor to the successful application of small data machine learning techniques. This involves the meticulous handling of data collection and cleaning, ensuring that the raw data is transformed into a comprehensive and usable dataset. Below, we delve into key strategies for data collection and cleaning in materials science.

Identification Of Relevant Data Sources

In the initial stage of data preprocessing, it is imperative to identify the relevant data sources for materials science research. This involves collating data from scientific literature, experimental records, and databases such as Materials Project, Citrination, and AFLOW, among others. The data should be structured and tagged appropriately to ensure the integrity and relevance of the dataset.

Removing Noise And Outliers In Data

One of the fundamental tasks in data cleaning is the removal of noise and outliers, which can significantly impact the accuracy of machine learning models. The identification and elimination of irrelevant or erroneous data points are essential to maintain the quality of the dataset. Techniques such as statistical analysis and visualization are employed to detect and filter out these anomalies, enabling the construction of a robust dataset for subsequent machine learning analysis.

Feature Engineering And Selection

In machine learning, feature engineering and selection play a crucial role in refining the data preprocessing stage, especially for small datasets in materials science. Effective feature engineering transforms raw data into informative features, while feature selection methods help in choosing the most relevant features for the model.

Transforming Raw Data Into Informative Features

Transforming raw data into informative features involves converting the available data into a format that best represents the underlying patterns and relationships. This process may include:

- Normalization and scaling to standardize the feature values.

- Creation of new features through mathematical operations or domain knowledge.

- Handling missing data through imputation or exclusion.

- One-hot encoding of categorical variables to make them suitable for machine learning algorithms.

Methods For Selecting The Most Relevant Features

Once the features are engineered, selecting the most relevant ones becomes essential for model performance and interpretability. Various methods can be utilized for this purpose:

- Statistical techniques such as univariate feature selection based on statistical tests like ANOVA or mutual information.

- Wrapper methods like recursive feature elimination that select optimal feature subsets by iterating through different combinations.

- Embedded methods, where feature selection is integrated into the model training process, such as LASSO regression for regularization.

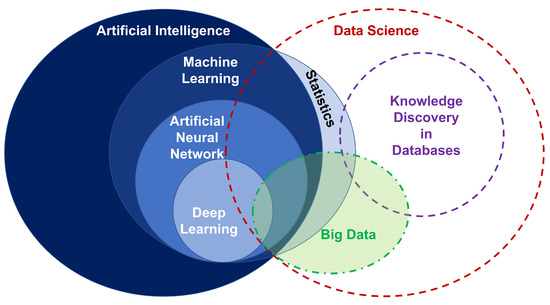

Credit: www.mdpi.com

Imputation Techniques For Missing Data

Handling missing data is a crucial aspect of data preprocessing in machine learning, especially when working with small datasets in materials science. Imputation techniques play a key role in filling in missing values and ensuring the integrity of the dataset. Employing the right strategies for handling missing data is essential for accurate and reliable machine learning models.

Strategies For Handling Missing Data

Missing data is a common issue in small datasets, and it can significantly impact the performance of machine learning models. Several strategies can be employed to handle missing data effectively, including:

- Identification and analysis of missing data patterns

- Deletion of records with missing values

- Imputation of missing values using statistical measures

- Utilizing machine learning algorithms for imputation

Imputation Techniques And Best Practices

Imputation techniques involve filling in missing data with estimated values, and several methods are commonly used to achieve this. It’s important to adhere to best practices to ensure the accuracy and reliability of imputed data. Some popular imputation techniques and best practices include:

- Mean, median, or mode imputation

- Linear regression imputation

- K-nearest neighbors (KNN) imputation

- Multiple imputation methods

Choosing the most suitable imputation technique depends on the nature of the missing data and the characteristics of the dataset. It’s crucial to assess the impact of imputation on the overall performance of the machine learning model and to validate the chosen imputation method to ensure the robustness of the results.

Data Scaling And Normalization

Data Scaling and Normalization are essential data preprocessing techniques in small data machine learning for materials science. In the pursuit of achieving optimal model performance, it is crucial to understand the importance of scaling and normalizing data, as well as the various techniques available for standardizing data.

Importance Of Scaling And Normalizing Data

Scaling and normalizing data are critical steps in data preprocessing for small data machine learning in materials science. Scaling ensures that all features contribute equally to the analysis by bringing them to a similar scale, preventing features with larger ranges from dominating the model training process. On the other hand, normalization is vital for dealing with features that may have different units or distributions, making it easier for the model to learn the underlying patterns without being biased by the magnitudes of the features.

Techniques For Standardizing Data

There are several techniques commonly used for standardizing data:

- Z-score normalization

- Min-max scaling

- Robust scaling

- Unit vector normalization

Handling Categorical Data

When working with small data in machine learning for materials science, handling categorical data is a crucial aspect of data preprocessing. Categorical variables, which represent qualitative data, often require specific techniques to ensure their correct incorporation into machine learning models. In this article, we will explore various approaches for handling categorical data in the context of small data machine learning for materials science.

Approaches For Encoding Categorical Variables

Encoding categorical variables is essential for machine learning models to process qualitative data effectively. There are several approaches to encoding categorical variables:

- Label Encoding: Assigning a unique numeric label to each category in the variable.

- One-Hot Encoding: Creating binary columns for each category, representing their presence or absence in the data.

- Target Encoding: Using the target variable to encode categorical features based on the mean of the target for each category.

Dealing With Imbalanced Categorical Data

Dealing with imbalanced categorical data is crucial to prevent bias in machine learning models. Here are some techniques to address imbalanced categorical data:

- Over-sampling: Generating synthetic samples for minority categories to balance the distribution.

- Under-sampling: Reducing the number of majority category samples to balance the distribution.

- SMOTE (Synthetic Minority Over-sampling Technique): Creating synthetic samples for the minority class based on k-nearest neighbors.

Data Augmentation For Small Datasets

When dealing with small datasets in machine learning for materials science, data augmentation plays a crucial role in improving model performance. Data augmentation involves generating new, synthetic data points from existing ones, helping to expand the size and diversity of the dataset. In this section, we’ll explore various methods for generating synthetic data and best practices for implementing data augmentation in small data machine learning.

Methods For Generating Synthetic Data

There are several methods for generating synthetic data to augment small datasets in materials science machine learning. These methods include:

- Image manipulations: Rotating, flipping, zooming, and adjusting brightness of images to create variations.

- Text data augmentation: Using techniques such as synonym replacement, sentence shuffling, and insertion of spelling errors to generate diverse textual data.

- Noise addition: Introducing random noise to numerical data to create variations in the feature space.

- Generative Adversarial Networks (GANs): Using GANs to generate realistic synthetic data that closely resembles the original dataset.

Best Practices For Data Augmentation In Small Data Ml

When applying data augmentation techniques to small datasets in materials science machine learning, it’s essential to follow best practices to ensure effectiveness and reliability. Some best practices include:

- Understanding domain-specific characteristics: Tailoring data augmentation methods to reflect the intrinsic properties of materials science data.

- Validation and testing: Ensuring that the augmented data does not compromise the integrity of the original dataset through rigorous validation and testing.

- Consistency in augmentation: Applying consistent augmentation techniques across the entire dataset to maintain coherence and generalization capability of the model.

- Regularizing augmentation: Using regularization techniques to prevent overfitting when using augmented data for training.

Dimensionality Reduction

Dimensionality Reduction is a critical step in the machine learning workflow for materials science, especially when dealing with small data sets. It involves the process of reducing the number of random variables under consideration by obtaining a set of principal variables. By reducing the dimensionality of the input data, this technique helps in overcoming the curse of dimensionality, improving model performance, and decreasing computational costs.

Principal Component Analysis (pca) For Small Data

Principal Component Analysis (PCA) is a widely used method for dimensionality reduction, particularly when dealing with small data. It works by transforming the input data into a new coordinate system to identify patterns and re-express the data in terms of these patterns, known as principal components. PCA is particularly useful for identifying the most significant aspects of the data, and it can effectively reduce the number of dimensions while retaining most of the initial variance.

Techniques For Reducing Dimensionality Without Losing Information

While PCA is effective, there are other techniques available for reducing dimensionality without losing essential information. These methods are particularly useful for small data machine learning in materials science, where preserving as much information as possible from the original dataset is crucial for accurate model training and predictions.

Cross-validation And Model Evaluation

Data preprocessing and model evaluation play a crucial role in building robust machine learning (ML) models, especially when dealing with small datasets in materials science. In this section, we will explore the strategies for evaluating ML models in small data and the significance of cross-validation techniques in ensuring robust model performance. Let’s delve into the world of cross-validation and model evaluation techniques for small data machine learning applications in materials science.

Strategies For Evaluating Ml Models In Small Data

When dealing with small datasets in materials science, the evaluation of ML models becomes paramount. Evaluating ML models in small data requires careful consideration of various factors to ensure the reliability and robustness of the models. By employing appropriate strategies, researchers can effectively assess the performance of ML models and make informed decisions about model selection and optimization.

Cross-validation Techniques For Robust Model Performance

Cross-validation is an essential technique for evaluating the performance of ML models, especially in scenarios with limited data. This approach involves partitioning the dataset into subsets, training the model on a portion of the data, and then evaluating its performance on the remaining data. By repeating this process with different partitions, researchers can obtain a more reliable estimate of the model’s performance and generalizability. Several cross-validation techniques such as k-fold and leave-one-out cross-validation are commonly used to ensure robust model performance in small data settings.

Conclusion

Data preprocessing plays a crucial role in small data machine learning for materials science applications. By addressing issues such as missing data, outliers, and noise, these techniques improve model accuracy and reliability. As researchers continue to explore innovative methods, refining data preprocessing will be essential for advancing machine learning in materials science.